http://aamulehdenblogit.ning.com/profiles/blogs/myytti-konesuper-lyst-poksahtaa

https://backchannel.com/the-myth-of-a-superhuman-ai-59282b686c62

I’ve heard that in the future computerized AIs will become so much smarter than us that they will take all our jobs and resources, and humans will go extinct. Is this true?

That’s the most common question I get whenever I give a talk about AI. The questioners are earnest; their worry stems in part from some experts who are asking themselves the same thing. These folks are some of the smartest people alive today, such as Stephen Hawking, Elon Musk, Max Tegmark, Sam Harris, and Bill Gates, and they believe this scenario very likely could be true. Recently at a conference convened to discuss these AI issues, a panel of nine of the most informed gurus on AI all agreed this superhuman intelligence was inevitable and not far away.


Yet buried in this scenario of a takeover of superhuman artificial intelligence are five assumptions which, when examined closely, are not based on any evidence. These claims might be true in the future, but there is no evidence to date to support them. The assumptions behind a superhuman intelligence arising soon are:

- Artificial intelligence is already getting smarter than us, at an exponential rate.

- We’ll make AIs into a general purpose intelligence, like our own.

- We can make human intelligence in silicon.

- Intelligence can be expanded without limit.

- Once we have exploding superintelligence it can solve most of our problems.

In contradistinction to this orthodoxy, I find the following five heresies to have more evidence to support them.

- Intelligence is not a single dimension, so “smarter than humans” is a meaningless concept.

- Humans do not have general purpose minds, and neither will AIs.

- Emulation of human thinking in other media will be constrained by cost.

- Dimensions of intelligence are not infinite.

- Intelligences are only one factor in progress.

If the expectation of a superhuman AI takeover is built on five key assumptions that have no basis in evidence, then this idea is more akin to a religious belief - a myth. In the following paragraphs I expand my evidence for each of these five counter-assumptions, and make the case that, indeed, a superhuman AI is a kind of myth.

1.

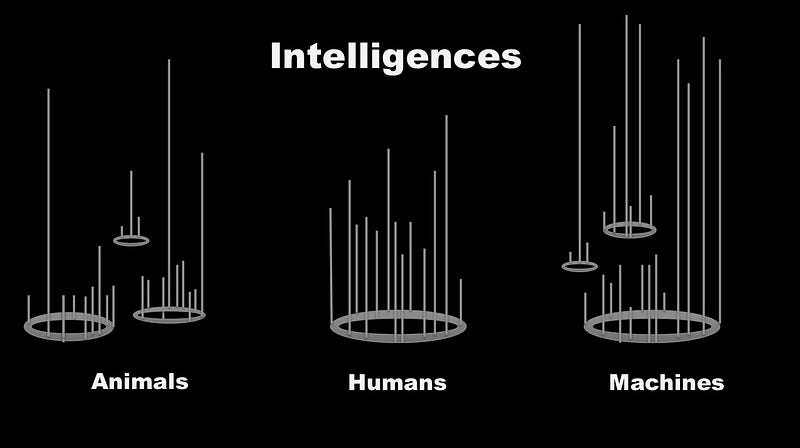

The most common misconception about artificial intelligence begins with the common misconception about natural intelligence. This misconception is that intelligence is a single dimension. Most technical people tend to graph intelligence the way Nick Bostrom does in his book, Superintelligence - as a literal, single-dimension, linear graph of increasing amplitude. At one end is the low intelligence of, say, a small animal; at the other end is the high intelligence of, say, a genius - almost as if intelligence were a sound level in decibels. Of course, it is then very easy to imagine the extension so that the loudness of intelligence continues to grow, eventually to exceed our own high intelligence and become a super-loud intelligence - a roar! - way beyond us, and maybe even off the chart.

This model is topologically equivalent to a ladder, so that each rung of intelligence is a step higher than the one before. Inferior animals are situated on lower rungs below us, while higher-level intelligence AIs will inevitably overstep us onto higher rungs. Time scales of when it happens are not important; what is important is the ranking — the metric of increasing intelligence.

The problem with this model is that it is mythical, like the ladder of evolution. The pre-Darwinian view of the natural world supposed a ladder of being, with inferior animals residing on rungs below humans. Even post-Darwin, a very common notion is the “ladder” of evolution, with fish evolving into reptiles, then up a step into mammals, up into primates, into humans, each one a little more evolved (and of course smarter) than the one before it. So the ladder of intelligence parallels the ladder of existence. But both of these models supply a thoroughly unscientific view.

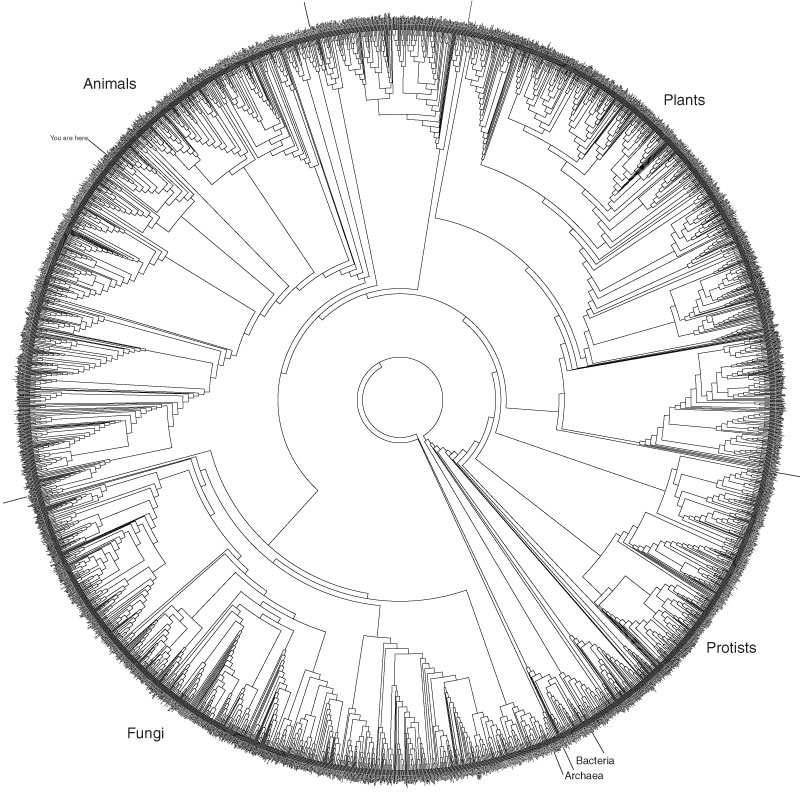

A more accurate chart of the natural evolution of species is a disk radiating outward, like this one (above) first devised by David Hillis at the University of Texas and based on DNA. This deep genealogy mandala begins in the middle with the most primeval life forms, and then branches outward in time. Time moves outward so that the most recent species of life living on the planet today form the circumference of this circle. This picture emphasizes a fundamental fact of evolution that is hard to appreciate: Every species alive today is equally evolved. Humans exist on this outer ring alongside cockroaches, clams, ferns, foxes, and bacteria. Every one of these species has undergone an unbroken chain of three billion years of successful reproduction, which means that bacteria and cockroaches today are as highly evolved as humans. There is no ladder.

Likewise, there is no ladder of intelligence. Intelligence is not a single dimension. It is a complex of many types and modes of cognition, each one a continuum. Let’s take the very simple task of measuring animal intelligence. If intelligence were a single dimension we should be able to arrange the intelligences of a parrot, a dolphin, a horse, a squirrel, an octopus, a blue whale, a cat, and a gorilla in the correct ascending order in a line. We currently have no scientific evidence of such a line. One reason might be that there is no difference between animal intelligences, but we don’t see that either. Zoology is full of remarkable differences in how animals think. But maybe they all have the same relative “general intelligence”? It could be, but we have no measurement, no single metric for that intelligence. Instead we have many different metrics for many different types of cognition.
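To make the "no single line" argument concrete, here is a minimal sketch of what happens when minds are scored on several dimensions at once. The animals come from the paragraph above, but the dimension names and every score are invented for illustration; they are assumptions, not measurements. One mind "dominates" another only if it is at least as good on every dimension and strictly better on at least one.

```python
from itertools import permutations

# Hypothetical, made-up scores (0-10) on three invented dimensions.
# These numbers are illustrative assumptions, not data from any study.
PROFILES = {
    "squirrel": {"spatial_memory": 9, "tool_use": 2, "social_learning": 3},
    "dolphin": {"spatial_memory": 5, "tool_use": 3, "social_learning": 9},
    "octopus": {"spatial_memory": 4, "tool_use": 8, "social_learning": 1},
}


def dominates(a, b):
    """True if profile a is >= b on every dimension and > b on at least one."""
    at_least_as_good = all(a[k] >= b[k] for k in a)
    strictly_better = any(a[k] > b[k] for k in a)
    return at_least_as_good and strictly_better


def dominance_pairs(profiles):
    """List every ordered pair (x, y) where x dominates y."""
    return [
        (x, y)
        for x, y in permutations(profiles, 2)
        if dominates(profiles[x], profiles[y])
    ]


# An empty list means no mind sits "above" another on all dimensions,
# so the profiles cannot be arranged on one ascending ladder.
print(dominance_pairs(PROFILES))
```

With these made-up numbers no pair is ordered; a ranking only appears once you collapse the dimensions into one weighted score, and the choice of weights is exactly the missing "single metric".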

Generated via Emergent Mind

Instead of a single decibel line, a more accurate model for intelligence is to chart its possibility space, like the above rendering of possible forms created by an algorithm written by Richard Dawkins. Intelligence is a combinatorial continuum. Multiple nodes, each node a continuum, create complexes of high diversity in high dimensions. Some intelligences may be very complex, with many sub-nodes of thinking. Others may be simpler but more extreme, off in a corner of the space. These complexes we call intelligences might be thought of as symphonies comprising many types of instruments. They vary not only in loudness, but also in pitch, melody, color, tempo, and so on. We could think of them as ecosystems. And in that sense, the different component nodes of thinking are co-dependent, and co-created.

Human minds are societies of minds, in the words of Marvin Minsky. We run on ecosystems of thinking. We contain multiple species of cognition that do many types of thinking: deduction, induction, symbolic reasoning, emotional intelligence, spatial logic, short-term memory, and long-term memory. The entire nervous system in our gut is also a type of brain with its own mode of cognition. We don’t really think with just our brain; rather, we think with our whole bodies.

These suites of cognition vary between individuals and between species. A squirrel can remember the exact location of several thousand acorns for years, a feat that blows human minds away. So in that one type of cognition, squirrels exceed humans. That superpower is bundled with some other modes that are dim compared to ours in order to produce a squirrel mind. There are many other specific feats of cognition in the animal kingdom that are superior to humans, again bundled into different systems.

Likewise in AI. Artificial minds already exceed humans in certain dimensions. Your calculator is a genius in math; Google’s memory is already beyond our own in a certain dimension. We are engineering AIs to excel in specific modes. Some of these modes are things we can do, but they can do better, such as probability or math. Others are types of thinking we can’t do at all - memorize every single word on six billion web pages, a feat any search engine can do. In the future, we will invent whole new modes of cognition that don’t exist in us and don’t exist anywhere in biology. When we invented artificial flying we were inspired by biological modes of flying, primarily flapping wings. But the flying we invented - propellers bolted to a wide fixed wing - was a new mode of flying unknown in our biological world. It is alien flying. Similarly, we will invent whole new modes of thinking that do not exist in nature. In many cases they will be new, narrow, “small,” specific modes for specific jobs - perhaps a type of reasoning only useful in statistics and probability.

In other cases the new mind will be complex types of cognition that we can use to solve problems our intelligence alone cannot. Some of the hardest problems in business and science may require a two-step solution. Step one is: Invent a new mode of thought to work with our minds. Step two: Combine to solve the problem. Because we are solving problems we could not solve before, we want to call this cognition “smarter” than us, but really it is different from us. It’s the differences in thinking that are the main benefits of AI. I think a useful model of AI is to think of it as alien intelligence (or artificial aliens). Its alienness will be its chief asset.

At the same time we will integrate these various modes of cognition into more complicated, complex societies of mind. Some of these complexes will be more complex than us, and because they will be able to solve problems we can’t, some will want to call them superhuman. But we don’t call Google a superhuman AI even though its memory is beyond us, because there are many things we can do better than it. These complexes of artificial intelligences will for sure be able to exceed us in many dimensions, but no one entity will do all we do better. It’s similar to the physical powers of humans. The industrial revolution is 200 years old, and while all machines as a class can beat the physical achievements of an individual human (speed of running, weight lifting, precision cutting, etc.), there is no one machine that can beat an average human in everything he or she does.

Even as the society of minds in an AI becomes more complex, that complexity is hard to measure scientifically at the moment. We don’t have good operational metrics of complexity that could determine whether a cucumber is more complex than a Boeing 747, or the ways their complexity might differ. That is one of the reasons why we don’t have good metrics for smartness as well. It will become very difficult to ascertain whether mind A is more complex than mind B, and for the same reason to declare whether mind A is smarter than mind B. We will soon arrive at the obvious realization that “smartness” is not a single dimension, and that what we really care about are the many other ways in which intelligence operates - all the other nodes of cognition we have not yet discovered.

2.

The second misconception about human intelligence is our belief that we have a general purpose intelligence. This repeated belief influences a commonly stated goal of AI researchers to create an artificial general purpose intelligence (AGI). However, if we view intelligence as providing a large possibility space, there is no general purpose state. Human intelligence is not in some central position, with other specialized intelligence revolving around it. Rather, human intelligence is a very, very specific type of intelligence that has evolved over many millions of years to enable our species to survive on this planet. Mapped in the space of all possible intelligences, a human-type of intelligence will be stuck in the corner somewhere, just as our world is stuck at the edge of a vast galaxy.

We can certainly imagine, and even invent, a Swiss-army knife type of thinking. It kind of does a bunch of things okay, but none of them very well. AIs will follow the same engineering maxim that all things made or born must follow: You cannot optimize every dimension. You can only have tradeoffs. You can’t have a general multipurpose unit outperform specialized functions. A big “do everything” mind can’t do everything as well as those things done by specialized agents. Because we believe our human minds are general purpose, we tend to believe that cognition does not follow the engineer’s tradeoff, that it will be possible to build an intelligence that maximizes all modes of thinking. But I see no evidence of that. We simply haven’t invented enough varieties of minds to see the full space (and so far we have tended to dismiss animal minds as a singular type with variable amplitude on a single dimension).

3.

Part of this belief in maximum general-purpose thinking comes from the concept of universal computation. Formally described as the Church-Turing hypothesis in 1936, this conjecture states that all computation that meets a certain threshold is equivalent. Therefore there is a universal core to all computation, whether it occurs in one machine with many fast parts, or slow parts, or even if it occurs in a biological brain, it is the same logical process. Which means that you should be able to emulate any computational process (thinking) in any machine that can do “universal” computation. Singularitans rely on this principle for their expectation that we will be able to engineer silicon brains to hold human minds, and that we can make artificial minds that think like humans, only much smarter. We should be skeptical of this hope because it relies on a misunderstanding of the Church-Turing hypothesis.

The starting point of the theory is: “Given infinite tape [memory] and time, all computation is equivalent.” The problem is that in reality, no computer has infinite memory or time. When you are operating in the real world, real time makes a huge difference, often a life-or-death difference. Yes, all thinking is equivalent if you ignore time. Yes, you can emulate human-type thinking in any matrix you want, as long as you ignore time or the real-life constraints of storage and memory. However, if you incorporate time, then you have to restate the principle in a significant way: Two computing systems operating on vastly different platforms won’t be equivalent in real time. That can be restated again as: The only way to have equivalent modes of thinking is to run them on equivalent substrates. The physical matter you run your computation on - particularly as it gets more complex - greatly influences the type of cognition that can be done well in real time.

I will extend that further to claim that the only way to get a very human-like thought process is to run the computation on very human-like wet tissue. That also means that very big, complex artificial intelligences run on dry silicon will produce big, complex, unhuman-like minds. If it were possible to build artificial wet brains using human-like grown neurons, my prediction is that their thought would be more similar to ours. The benefits of such a wet brain are proportional to how similar we make the substrate. The costs of creating wetware are huge, and the closer that tissue is to human brain tissue, the more cost-efficient it is to just make a human. After all, making a human is something we can do in nine months.

Furthermore, as mentioned above, we think with our whole bodies, not just with our minds. We have plenty of data showing how our gut’s nervous system guides our “rational” decision-making processes, and can predict and learn. The more we model the entire human body system, the closer we get to replicating it. An intelligence running on a very different body (in dry silicon instead of wet carbon) would think differently.

I don’t see that as a bug but rather as a feature. As I argue in point 1, thinking differently from humans is AI’s chief asset. This is yet another reason why calling it “smarter than humans” is misleading and misguided.

4.

At the core of the notion of a superhuman intelligence - particularly the view that this intelligence will keep improving itself - is the essential belief that intelligence has an infinite scale. I find no evidence for this. Again, mistaking intelligence as a single dimension helps this belief, but we should understand it as a belief. There is no other physical dimension in the universe that is infinite, as far as science knows so far. Temperature is not infinite - there is finite cold and finite heat. There is finite space and time. Finite speed. Perhaps the mathematical number line is infinite, but all other physical attributes are finite. It stands to reason that reason itself is finite, and not infinite. So the question is, where is the limit of intelligence? We tend to believe that the limit is way beyond us, way “above” us, as we are “above” an ant. Setting aside the recurring problem of a single dimension, what evidence do we have that the limit is not us? Why can’t we be at the maximum? Or maybe the limits are only a short distance away from us? Why do we believe that intelligence is something that can continue to expand forever?

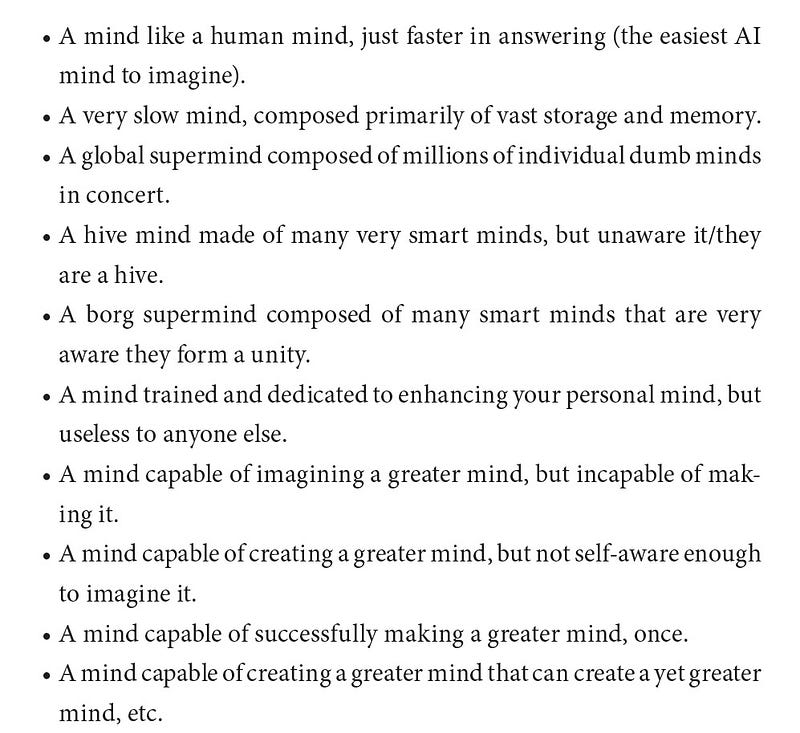

A much better way to think about this is to see our intelligence as one of a million types of possible intelligences. So while each dimension of cognition and computation has a limit, if there are hundreds of dimensions, then there are uncountable varieties of mind - none of them infinite in any dimension. As we build or encounter these uncountable varieties of mind we might naturally think of some of them as exceeding us. In my recent book The Inevitable, I sketched out some of that variety of minds that were superior to us in some way. Here is an incomplete list:

Some folks today may want to call each of these entities a superhuman AI, but the sheer variety and alienness of these minds will steer us to new vocabularies and insights about intelligence and smartness.

Second, believers of Superhuman AI assume intelligence will increase exponentially (in some unidentified single metric), probably because they also assume it is already expanding exponentially. However, there is zero evidence so far that intelligence - no matter how you measure it - is increasing exponentially. By exponential growth I mean that artificial intelligence doubles in power on some regular interval. Where is that evidence? Nowhere I can find. If there is none now, why do we assume it will happen soon? The only things expanding on an exponential curve are the inputs in AI, the resources devoted to producing the smartness or intelligences. But the output performance is not on a Moore’s law rise. AIs are not getting twice as smart every 3 years, or even every 10 years.

I asked a lot of AI experts for evidence that intelligence performance is on an exponential gain, but all agreed we don’t have metrics for intelligence, and besides, it wasn’t working that way. When I asked Ray Kurzweil, the exponential wizard himself, where the evidence for exponential AI was, he wrote to me that AI does not increase explosively but rather by levels. He said: “It takes an exponential improvement both in computation and algorithmic complexity to add each additional level to the hierarchy… So we can expect to add levels linearly because it requires exponentially more complexity to add each additional layer, and we are indeed making exponential progress in our ability to do this. We are not that many levels away from being comparable to what the neocortex can do, so my 2029 date continues to look comfortable to me.”

What Ray seems to be saying is that it is not that the power of artificial intelligence is exploding exponentially, but that the effort to produce it is exploding exponentially, while the output is merely rising a level at a time. This is almost the opposite of the assumption that intelligence is exploding. This could change at some time in the future, but artificial intelligence is clearly not increasing exponentially now.
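The arithmetic behind that reading can be sketched in a few lines. This is a toy model under loudly stated assumptions: effort doubles every year, and each new level costs twice as much as the previous one. Both factors, and the function name `levels_reached`, are invented for illustration and do not come from Kurzweil.

```python
def levels_reached(effort: int, cost_factor: int = 2) -> int:
    """Highest level n such that cost_factor**n <= effort.

    Assumption (hypothetical): reaching level n requires cost_factor**n
    units of effort, so each level is cost_factor times dearer than the last.
    """
    level, cost = 0, cost_factor
    while cost <= effort:
        level += 1
        cost *= cost_factor
    return level


# Assumption (hypothetical): available effort doubles every year.
for year in range(6):
    effort = 2 ** year
    print(f"year {year}: effort {effort:>2}, levels {levels_reached(effort)}")
```

Under these assumptions the effort column doubles each year while the levels column climbs by exactly one per year: exponential input, linear output.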

Therefore when we imagine an “intelligence explosion,” we should imagine it not as a cascading boom but rather as a scattering exfoliation of new varieties. A Cambrian explosion rather than a nuclear explosion. The results of accelerating technology will most likely not be super-human, but extra-human. Outside of our experience, but not necessarily “above” it.

5.

Another unchallenged belief of a super AI takeover, with little evidence, is that a super, near-infinite intelligence can quickly solve our major unsolved problems.

Many proponents of an explosion of intelligence expect it will produce an explosion of progress. I call this mythical belief “thinkism.” It’s the fallacy that future levels of progress are only hindered by a lack of thinking power, or intelligence. (I might also note that the belief that thinking is the magic super ingredient to a cure-all is held by a lot of guys who like to think.)

Let’s take curing cancer or prolonging longevity. These are problems that thinking alone cannot solve. No amount of thinkism will discover how the cell ages, or how telomeres fall off. No intelligence, no matter how super duper, can figure out how the human body works simply by reading all the known scientific literature in the world today and then contemplating it. No super AI can simply think about all the current and past nuclear fission experiments and then come up with working nuclear fusion in a day. A lot more than just thinking is needed to move between not knowing how things work and knowing how they work. There are tons of experiments in the real world, each of which yields tons and tons of contradictory data, requiring further experiments to form the correct working hypothesis. Thinking about the potential data will not yield the correct data.

Thinking (intelligence) is only part of science; maybe even a small part. As one example, we don’t have enough proper data to come close to solving the death problem. In the case of working with living organisms, most of these experiments take calendar time. The slow metabolism of a cell cannot be sped up. They take years, or months, or at least days, to get results. If we want to know what happens to subatomic particles, we can’t just think about them. We have to build very large, very complex, very tricky physical structures to find out. Even if the smartest physicists were 1,000 times smarter than they are now, without a Collider, they will know nothing new.

There is no doubt that a super AI can accelerate the process of science. We can make computer simulations of atoms or cells and we can keep speeding them up by many factors, but two issues limit the usefulness of simulations in obtaining instant progress. First, simulations and models can only be faster than their subjects because they leave something out. That is the nature of a model or simulation. Also worth noting: The testing, vetting and proving of those models also has to take place in calendar time to match the rate of their subjects. The testing of ground truth can’t be sped up.

These simplified versions in a simulation are useful in winnowing down the most promising paths, so they can accelerate progress. But there is no excess in reality; everything real makes a difference to some extent; that is one definition of reality. As models and simulations are beefed up with more and more detail, they come up against the limit that reality runs faster than a 100 percent complete simulation of it. That is another definition of reality: the fastest possible version of all the details and degrees of freedom present. If you were able to model all the molecules in a cell and all the cells in a human body, this simulation would not run as fast as a human body. No matter how much you thought about it, you still need to take time to do experiments, whether in real systems or in simulated systems.

To be useful, artificial intelligences have to be embodied in the world, and that world will often set their pace of innovations. Without conducting experiments, building prototypes, having failures, and engaging in reality, an intelligence can have thoughts but not results. There won’t be instant discoveries the minute, hour, day or year a so-called “smarter-than-human” AI appears. Certainly the rate of discovery will be significantly accelerated by AI advances, in part because alienish AI will ask questions no human would ask, but even a vastly powerful (compared to us) intelligence doesn’t mean instant progress. Problems need far more than just intelligence to be solved.

Not only are cancer and longevity problems that intelligence alone can’t solve, so is intelligence itself. The common trope among Singularitans is that once you make an AI “smarter than humans” then all of a sudden it thinks hard and invents an AI “smarter than itself”, which thinks harder and invents one yet smarter, until it explodes in power, almost becoming godlike. We have no evidence that merely thinking about intelligence is enough to create new levels of intelligence. This kind of thinkism is a belief. We have a lot of evidence that in addition to great quantities of intelligence we need experiments, data, trial and error, weird lines of questioning, and all kinds of things beyond smartness to invent new kinds of successful minds.

I’d conclude by saying that I could be wrong about these claims. We are in the early days. We might discover a universal metric for intelligence; we might discover it is infinite in all directions. Because we know so little about what intelligence is (let alone consciousness), the possibility of some kind of AI singularity is greater than zero. I think all the evidence suggests that such a scenario is highly unlikely, but it is greater than zero.

So while I disagree on its probability, I am in agreement with the wider aims of OpenAI and the smart people who worry about a superhuman AI - that we should engineer friendly AIs and figure out how to instill self-replicating values that match ours. Though I think a superhuman AI is a remote possible existential threat (and worthy of considering), I think that, given its unlikeliness (based on the evidence we have so far), it should not be the guide for our science, policies, and development. An asteroid strike on the Earth would be catastrophic. Its probability is greater than zero (and so we should support the B612 Foundation), but we shouldn’t let the possibility of an asteroid strike govern our efforts in, say, climate change, or space travel, or even city planning.

Likewise, the evidence so far suggests AIs most likely won’t be superhuman but will be many hundreds of extra-human new species of thinking, most different from humans, none that will be general purpose, and none that will be an instant god solving major problems in a flash. Instead there will be a galaxy of finite intelligences, working in unfamiliar dimensions, exceeding our thinking in many of them, working together with us in time to solve existing problems and create new problems.

I understand the beautiful attraction of a superhuman AI god. It’s like a new Superman. But like Superman, it is a mythical figure. Somewhere in the universe a Superman might exist, but he is very unlikely. However, myths can be useful, and once invented they won’t go away. The idea of a Superman will never die. The idea of a superhuman AI Singularity, now that it has been birthed, will never go away either. But we should recognize that it is a religious idea at this moment and not a scientific one. If we inspect the evidence we have so far about intelligence, artificial and natural, we can only conclude that our speculations about a mythical superhuman AI god are just that: myths.

Many isolated islands in Micronesia made their first contact with the outside world during World War II. Alien gods flew over their skies in noisy birds, dropped food and goods on their islands, and never returned. Religious cults sprang up on the islands praying to the gods to return and drop more cargo. Even now, fifty years later, many still wait for the cargo to return. It is possible that superhuman AI could turn out to be another cargo cult. A century from now, people may look back to this time as the moment when believers began to expect a superhuman AI to appear at any moment and deliver them goods of unimaginable value. Decade after decade they wait for the superhuman AI to appear, certain that it must arrive soon with its cargo.

Yet non-superhuman artificial intelligence is already here, for real. We keep redefining it, increasing its difficulty, which imprisons it in the future, but in the wider sense of alien intelligences - of a continuous spectrum of various smartness, intelligences, cognition, reasonings, learning, and consciousness - AI is already pervasive on this planet and will continue to spread, deepen, diversify, and amplify. No invention before will match its power to change our world, and by century’s end AI will touch and remake everything in our lives. Still the myth of a superhuman AI, poised to either gift us super-abundance or smite us into super-slavery (or both), will probably remain alive - a possibility too mythical to dismiss.

Art direction by Robert Shaw.

Discussion:

Risto Juhani Koivula commented, 29 April 2017 19:12

Kevin Kelly's blog: http://kk.org/thetechnium/

" Why I Don’t Worry About a Super AI

[I originally wrote this in response to Jaron Lanier’s worry post on Edge.] "

Heikki Karjalainen commented, 29 April 2017 19:45

Risto Juhani...

Why do you clog the forums with those absurdly long texts lifted from a link, why?

What is the core of your message???

The headline is not an answer to that!

Risto Juhani Koivula commented, 7 May 2017 11:03

One reason is that links tend to close. They don't disappear (whatever you once fart onto the net can also be found there...), but they go behind logins, change their address, or are discontinued at their original location. Of course, an article can usually be recovered by googling even a shorter quotation.

Here is more on the topic, raked together by a distinguished fellow debunker of nonsense:

http://grohn.puheenvuoro.uusisuomi.fi/236591-fujitsun-hopo-tekoalysta

Fujitsu's nonsense about AI

Fujitsu chief technology officer Joseph Reger's view of the near future of AI is realistic (figure 1), but the third phase, "superintelligence" (figure 2), is already sci-fi, singularity fluff.

Perhaps behind that singularity fluff lies the age-old Akasha myth:

The Western religious philosophy called Theosophy has popularized the word Akasha as an adjective, through the use of the term "Akashic records" or "Akashic library", referring to an ethereal compendium of all knowledge and history.

Scott Cunningham (1995) uses the term Akasha to refer to "the spiritual force that Earth, Air, Fire, and Water descend from".

Ervin László in Science and the Akashic Field: An Integral Theory of Everything (2004), based on ideas by Rudolf Steiner, posits "a field of information" as the substance of the cosmos, which he calls "Akashic field" or "A-field". (Source: wiki)

Tuo Laszlo on ollut Turun ns. tulevaisuudentutkijoiden vieraana, mikä kertonee jotain tuosta "tieteestä".

Risto Juhani Koivula kommentoi_ 7. toukokuu 2017 11:29 Poista kommentti

Turun yliopiston harhainen dosentuuri

7.5.2017 09:33 Lauri Gröhn

Englanninkielen game voi suomeksi tarkoittaa sekä peliä että leikkiä. Siitä ehkä johtuu Turun yliopiston käsitteellisesti harhainen nimike peli- ja leikkitutkimuksen dosentti, kuva 1. Nimetyn dosentin tutkimusala liittyy selvästi vain digipeleihin, kuva 2.

Digitaalisten pelien pelaamisella ei ole käytännöllisesti katsoen mitään yhteistä leikkimisen kanssa. Leikkiminen on lasten kehittymisen kannalta olennaisen tärkeää, digipelit ovat lasten aivojen kehittymistä haittaavia.

Below is a small comparison of play and digital gaming:

"Play represents reality symbolically, imaginatively, in make-believe."

Games generally do not.

"Play requires the players' voluntary and active commitment to it."

Games addict and hook.

"Play is sustained by the child's own intrinsic motivation and the pleasure the activity produces."

Games addict; they do not motivate intrinsically.

"Play is connected to the child's own experiences."

Games hardly ever.

"Children play with objects and things already familiar to them, or with new things that they are just getting to know and want to find out about."

Games hardly at all.

"The rules of play are created by the players themselves; the internal rules of play do not come from outside the play."

Games are the creations of nerds and marketing men.

"In play, the activity itself is central, not so much its goals."

Games have baits as "goals".

Quotations: Wiki

The English-to-Finnish translation of Lauri Järvilehto's book Hauskan oppimisen vallankumous also badly confuses the concepts of play and game:

http://grohn.vapaavuoro.uusisuomi.fi/kulttuuri/188126-kirja-lukijan-vastuulla

Risto Juhani Koivula commented, 10 May 2017 12:17

Finland's EK (Elinkeinoelämän keskusliitto, the Confederation of Finnish Industries, a labour-market organisation) wanks along to the beat of the standard AI nonsense singsong...

http://www.talouselama.fi/uutiset/suomalaisyrittaja-varoittaa-tekoa...

Finnish entrepreneur warns: AI will sweep through with greater force than the industrial revolution. "A phenomenon with 3,000 times the impact"

Artificial intelligence will upend the world as the industrial revolution did, but with many times the impact, says Finnish startup entrepreneur and business graduate Maria Ritola.

”The exponential development of AI is changing the world in just the same way as electricity did,” Ritola said in her speech at EK's spring seminar.

”Absolutely every industry will change as a result of this development. That is a cold fact.”

Ritola is one of the founders of the AI company Iris AI, and is thus among the few Finns operating on the crest of the AI upheaval. Founded in 2015, Iris AI has been named one of the ten most innovative companies in the world.

The startup, founded in Norway and operating out of London, is aiming high: its founders want to promote the use of science and the solving of the world's major problems with AI. Having gone through as many as 30 million openly available research papers, Iris AI helps companies, startups and researchers find material in research for new innovations.

Upheaval of working life

It is no new idea that AI eats jobs. The scale of the change, however, has still not sunk in at the level of either individuals or societies, Ritola says.

"Compared with the industrial revolution, the effects of what we are now going through are many times greater. A phenomenon ten times the speed, 300 times the scale, and 3,000 times the impact. The effects on the labour market are of course accordingly large," she warned.

According to Ritola, people divide into two schools of thought on what they think AI will do to jobs in the future.

"The first school says that all work can be broken down into routines and all work can be done by robots, so in the future we will have no work. The second school says that so far technological development has always created new work. So jobs will certainly disappear, but work will be redefined."

Ritola reckons the answer lies somewhere between the two schools.

Whichever school one counts oneself in, one thing is key: AI looks set to take people's routine tasks first. According to Ritola, this means people must be able to move from routine-based jobs to tasks that demand more skill.

"Moving from one job to another will be harder for a person, because they cannot move from one routine-based job to another but have to acquire new skills."

If all work disappears, or re-employment becomes extremely difficult, both practical and philosophical problems lie ahead.

"Questions about future livelihood and the meaning of life are essential."

An incomprehensible pace of change

Alongside the threats, AI also offers incredible opportunities, Ritola points out. According to her, 3,000 research articles, more than 60,000 books and 200,000 news articles are published every day. The amount of existing data is enormous, and no individual can read and absorb more than a fraction of it.

"The positive news is that computing power is growing even faster than the amount of data. It follows an exponential curve, doubling every two years as in Moore's law. This computing power together with the data creates an opportunity and a development that we humans have never seen before," she said.

”Together, computing power and data make it possible to build computational algorithms that use deep learning. These algorithms take their inspiration from the workings of the human brain. What characterises them is that they learn independently from large amounts of data and do so much faster than a human."
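For readers who want to see what the quoted "doubling every two years" claim implies numerically, here is a minimal sketch. It takes the two-year doubling period from Ritola's quote at face value; the function name and the chosen time spans are illustrative assumptions, not figures from the article.

```python
def growth_factor(years: float, doubling_period_years: float = 2.0) -> float:
    """Multiplier after `years` if capacity doubles every `doubling_period_years`.

    The two-year default is the figure quoted in the article; everything
    else here is a hypothetical illustration.
    """
    return 2.0 ** (years / doubling_period_years)


# Illustrative time spans (not taken from the article).
for years in (2, 10, 20):
    print(f"{years:>2} years -> x{growth_factor(years):,.0f}")
```

Under that assumption, ten years corresponds to five doublings and twenty years to ten. The sketch says nothing about whether the doubling actually continues, which is the point the commenters dispute.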

Iris AI's artificial intelligence is also based on such an algorithm. It understands the contexts of words and finds regularities in research. According to Ritola, AI thus turns data into jigsaw pieces that can in future be combined in ways we do not yet grasp.

"If we could make optimal use of what the scientific community has managed to produce for us, quite a few of the world's problems would probably already be solved," Ritola reckoned.

The European Investment Bank shoves money at "Kurzweil" Google: "FOR RECORDING OUR DREAMS ON CASSETTE, IS IT?"...

http://www.is.fi/taloussanomat/yrittaja/art-2000005201947.html

Risto Juhani Koivula commented, 19 May 2017 02:19

Finnish company received 25 million euros from the EIB, the largest financing granted to the Nordic countries

Published: 8 May, 15:44

MariaDB develops an open-source database. According to the press release from the investment bank and the company, the financing is meant to advance MariaDB's product development so that the company can serve its growing international base of large enterprise customers.

MariaDB also needs financing to boost sales and marketing in Europe, America and Asia.

MariaDB needs new talent in Europe, particularly in Finland, where it plans to hire developers specialised in databases.

The database market is changing fast and, according to the release, open-source databases are continually being adopted around the world. For example, large companies such as Telefónica, DBS Bank and Teleplan are seeking to cut costs and renew their business by overhauling their IT solutions.

Market research firm IDC has estimated in its worldwide forecast that the database market will exceed 50 billion dollars this year. In 2015 its value was 40 billion dollars.
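As a rough check on the two market figures quoted above, the sketch below computes the implied compound annual growth rate. It assumes that "this year" means 2017 (the article appeared in May 2017), so the span is two years, and it treats 50 billion as the end value although the forecast says the market will exceed that. The function name is hypothetical.

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate between two values over `years` years."""
    return (end_value / start_value) ** (1.0 / years) - 1.0


# 40 billion USD (2015) -> at least 50 billion USD (assumed 2017).
rate = cagr(40.0, 50.0, 2)
print(f"Implied annual growth: {rate:.1%}")
```

Because 50 billion is a lower bound in the forecast, the result is a floor on the implied growth rate rather than an estimate of it.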

Risto Juhani Koivula commented, 22 May 2017 19:03

http://grohn.puheenvuoro.uusisuomi.fi/236891-option-hopoa-tekoalysta

Optio babbles about AI

There is probably not a single journalist in Finland with enough understanding of AI to avoid writing down verbatim all the hokum that AI believers and marketing men churn out at them.

Kauppalehti Optio as an example, figure 1:

"In the future, Iris AI will be able to carry out scientific research independently."

That would require strong AI, but there are no signs of such a thing, at least not yet, figure 3. Behind the view is the 10-week intensive course that the company's founding member Maria Ritola, M.Sc. (Econ.), took at the so-called Singularity University!

And the world, too, will be fixed with AI, figure 2:

AI can connect research from different fields. The company's mission is to help researchers and companies find solutions to the world's most pressing problems.

Besides these amateurs, a real AI researcher, Heikki Honkela, has also been guilty of that bluster.

Question:

Should a journalist understand at least something about the subject they write about?

PS

There is no evidence of strong AI, and many do not believe in it, figure 3.

PS2

Talouselämä fell for the same entrepreneur: http://grohn.puheenvuoro.uusisuomi.fi/237218-talouselama-hopsii-tek...

It would certainly be fascinating if we could create a god for ourselves.

This is a matter of some kind of new religion, transhumanism:

Transhumanism (sometimes abbreviated >H or H+) is an international movement and school of thought according to which humans can, through rationally guided scientific and technological development, transcend the limitations of present-day humanity and improve themselves physically, mentally and socially. Transhumanists hold, for example, that ageing should be voluntary and that the unconscious processes of the human body should be under the individual's conscious control. Wiki

In his own brand of hokum-ism, Gröhn forgets the most important quantity in technological evolution, namely chance.

Many of the most significant inventions have been based on an observation brought about by chance and on refining that observation into a working product.

The human brain, too, runs on some kind of code. Why couldn't a computer one day work on the same principle? I myself would not rule out the possibility that someone among the tens of thousands of coders researching AI could come up with a significant AI-related innovation.

Quantum computers are already on their way.

Gröhn acts as the Inquisition once did.

When something is not understood or is feared, people would rather burn those who study it than wait and see what comes.

I urge Gröhn to watch the fictional but extremely entertaining film Chappie.

RJK: The brain does NOT run on ("translatable") code, not even an individual one:

https://hameemmias.vuodatus.net/lue/2015/09/tieteellinen-vallankumo...

" So does this scientific revolution then mean that Fields's theory has ”refuted the mirror neuron theory”, or some other sociobiologistic humbug theory?

Of course it has refuted those too, for good!

Those pseudo-theories, however, have at no stage had anything to do with genuine scientific psychology or neurophysiology.

Before Fields published his article ”White Matter Matters” on the mechanism of conditioning in the Scientific American ”election issue” 3/2008 (about which Kansan Ääni 4/2008 carried the piece ”Ajattelun ja muun ehdollistumisen biokemiallinen mekanismi selviämässä”, that is, "The biochemical mechanism of thinking and other conditioning is becoming clear"), the scientific picture of the human being at that time was dominated by two or even three false dogmas, which about five (5) well-known researchers, Fields included, had dared to doubt actively, such as:

- the conditioning mechanism ”is located only in neurons”,

- the storage mechanism "is chemical", and perhaps, if one absolutely insists on cultivating the computer analogy,

- the storage of information is somehow "digital”; there was frequent talk of "encoding".

As far as is now known, all of these open and hidden assumptions, which were also used to "prove" one's own position, are false."

The brain's code and evolution are wretched metaphors in this context. Strong AI will not arise by chance, if it arises at all.

Quantum computers may come about some day, or they may not. The future cannot be read from developers' funding applications.

Any quantum computers that do appear will not help strong AI one bit; quantity does not turn into quality.

There is no shortage of those sci-fi stories about robots, but they are no substitute for the slow and heavy work of genuinely getting to grips with things, of studying. They may well inspire people to enter the field, which is in itself a good thing.

Come back when you have read one book by each of the people named in figure 3.

So you are clueless about futurology:

http://grohn.vapaavuoro.uusisuomi.fi/kulttuuri/236...

I wonder how things stood with the birth and development of humankind. Chance, or a programmed snippet of code?

Your studies have been wasted on you if all they have produced is narrow-mindedness reminiscent of the Dark Ages.

Machines already beat humans at chess and at Go. Whether that is AI or pure mathematics, who knows, and I don't care either.

I would not, however, publicly deny the possibilities of AI the way you do.

One may be sceptical, but belittling the progress of information technology is sheer stupidity.

AI will most likely be born of the need to produce weapons or weapon platforms capable of independent thought, be they robots or anything else.

When it comes to the arms industry, budgets know no limits. That is where AI is being spawned, in some DARPA laboratory. And what is certain is that Gröhn, at least, will not be the first to be told of its existence.

You have fallen for the hypers' delusion; you are completely clueless. Chess and Go are not even proper AI applications. The only narrow-minded one is you yourself, because in your lack of expertise you have narrowed your views down to the ramblings of hype believers without bothering to get to grips with things. Progress does not work like that. Just look at figure 5 on the sorry tale of ZenRobotics.

Many smarter people have fallen for the hypers too, Matti Apunen among them:

http://grohn.puheenvuoro.uusisuomi.fi/228142-matti...

Which AI applications do you "approve of"? By what criteria? How is AI defined?

Chess was done mainly by programming and Go largely by pattern recognition.

All so-called AI applications have been built through hard work, and they amount only to so-called weak AI.

AI and robotics are talked about by people who have no idea of the subject itself. Nowadays any computer program that performs some task previously done manually by a human is called AI, however simple it may be. A good example was heard on yesterday's A-talk, where it was said that "AI" processes job application forms, although what is involved is essentially simple classification. A human would probably do this considerably better, but since there are so many applicants, wrong choices do no great harm.

In the field of AI there has been hardly any methodological development of algorithms; the methods still rest on old statistical methods and logical inference. Nothing else can be expected as long as the methods are based on numerical computation. Only in computing capacity has there been dramatic progress, which has made new applications possible.

"AI can connect research from different fields. The company's mission is to help researchers and companies find solutions to the world's most pressing problems."

This did, to put it mildly, bring a smile to my lips... AI enthusiasts apparently do not know that in today's world what is a solution for one party is a problem for another, and that removing this conflict would itself be a problem for someone. Do they even consider whose cause they are advancing? Have they started developing artificial morality yet?

Quite. Politics is the management of NON-SHARED affairs. And that new "youth" does not grasp that it can be a long way from an idea to a prototype, and a long way, even an endless one, from a prototype to a working application.

The other person interviewed by Optio is Harri Valpola, figure 4.

"Valpola also studied neuroscience and robotics and worked, among other places, in a Swiss research group that was developing a robot at the level of a two-year-old human child. Valpola's task was to build the brain.

In 2004 he returned to Finland and founded his own neurorobotics research group, which produced significant research results. These were put to use at ZenRobotics, a developer of waste-sorting robots that Valpola co-founded in 2007. After working for three years as the company's chief technology officer, he decided to return to research once more. The Curious AI Company was initially planned as a part of ZenRobotics, but in the end it became an independent company. ZenRobotics owns a small slice of The Curious AI Company."

Founded 10 years ago amid great AI fanfare, ZenRobotics is still only a promise for the future: figure 5

http://grohn.puheenvuoro.uusisuomi.fi/237218-talouselama-hopsii-tek...

Talouselämä babbles about AI

There is probably not a single journalist in Finland with enough understanding of AI to avoid writing down verbatim all the hokum that AI believers and marketing men churn out at them.

This time the example is Talouselämä, figure 1. Quantity does not turn into quality.

"Compared with the industrial revolution, the effects of what we are now going through are many times greater. A phenomenon ten times the speed, 300 times the scale, and 3,000 times the impact. The effects on the labour market are of course accordingly large," she warned.

Behind it is Ritola's 10-week course at Singularity "University".

Kauppalehti Optio wrote the same rubbish, albeit considerably more cautiously:

http://grohn.puheenvuoro.uusisuomi.fi/236891-option-hopoa-tekoalysta

Risto Juhani Koivula commented, 22 May 2017 19:26

http://grohn.vapaavuoro.uusisuomi.fi/kulttuuri/237309-mindfulness-s...

Mindfulness shrivels the amygdalae

The amygdalae are the brain's "emotion computer", which helps in recognising facial expressions and forming social relationships. Shrivelling them is outrageous commercial activity.

MRI scans show that after an eight-week course of mindfulness practice, the brain’s “fight or flight” center, the amygdala, appears to shrink. This primal region of the brain, associated with fear and emotion, is involved in the initiation of the body’s response to stress. Scientific American

So the courses shrivel the amygdalae: The amygdalae have been called the brain's so-called emotion computer.[1] People with a large amygdala are able to judge other people's trustworthiness from their facial expressions more accurately than others[2] and they also form more social relationships than others. Wiki

https://blogs.scientificamerican.com/guest-blog/what-does-mindfulne...

What Does Mindfulness Meditation Do to Your Brain?

As you read this, wiggle your toes. Feel the way they push against your shoes, and the weight of your feet on the floor. Really think about what your feet feel like right now - their heaviness.

If you’ve never heard of mindfulness meditation, congratulations, you’ve just done a few moments of it. More people than ever are doing some form of this stress-busting meditation, and researchers are discovering it has some quite extraordinary effects on the brains of those who do it regularly.

Originally an ancient Buddhist meditation technique, in recent years mindfulness has evolved into a range of secular therapies and courses, most of them focused on being aware of the present moment and simply noticing feelings and thoughts as they come and go.

It’s been accepted as a useful therapy for anxiety and depression for around a decade, and mindfulness websites like GetSomeHeadSpace.com are attracting millions of subscribers. It’s being explored by schools, pro sports teams and military units to enhance performance, and is showing promise as a way of helping sufferers of chronic pain, addiction and tinnitus, too. There is even some evidence that mindfulness can help with the symptoms of certain physical conditions, such as irritable bowel syndrome, cancer, and HIV.

Yet until recently little was known about how a few hours of quiet reflection each week could lead to such an intriguing range of mental and physical effects. Now, as the popularity of mindfulness grows, brain imaging techniques are revealing that this ancient practice can profoundly change the way different regions of the brain communicate with each other – and therefore how we think – permanently.

Mindfulness practice and expertise is associated with a decreased volume of grey matter in the amygdala (red), a key stress-responding region. (Image courtesy of Adrienne Taren)

No fear

MRI scans show that after an eight-week course of mindfulness practice, the brain’s “fight or flight” center, the amygdala, appears to shrink. This primal region of the brain, associated with fear and emotion, is involved in the initiation of the body’s response to stress.

As the amygdala shrinks, the pre-frontal cortex – associated with higher order brain functions such as awareness, concentration and decision-making – becomes thicker.

The “functional connectivity” between these regions – i.e. how often they are activated together – also changes. The connection between the amygdala and the rest of the brain gets weaker, while the connections between areas associated with attention and concentration get stronger.

The scale of these changes correlates with the number of hours of meditation practice a person has done, says Adrienne Taren, a researcher studying mindfulness at the University of Pittsburgh.

“The picture we have is that mindfulness practice increases one’s ability to recruit higher order, pre-frontal cortex regions in order to down-regulate lower-order brain activity,” she says.

In other words, our more primal responses to stress seem to be superseded by more thoughtful ones.

Lots of activities can boost the size of various parts of the pre-frontal cortex – video games, for example – but it’s the disconnection of our mind from its “stress center” that seems to give rise to a range of physical as well as mental health benefits, says Taren.

“I’m definitely not saying mindfulness can cure HIV or prevent heart disease. But we do see a reduction in biomarkers of stress and inflammation. Markers like C-reactive proteins, interleukin 6 and cortisol – all of which are associated with disease.”

Feel the pain

Things get even more interesting when researchers study mindfulness experts experiencing pain. Advanced meditators report feeling significantly less pain than non-meditators. Yet scans of their brains show slightly more activity in areas associated with pain than the non-meditators.

“It doesn’t fit any of the classic models of pain relief, including drugs, where we see less activity in these areas,” says Joshua Grant, a postdoc at the Max Planck Institute for Human Cognitive and Brain Sciences in Leipzig, Germany. The expert mindfulness meditators also showed “massive” reductions in activity in regions involved in appraising stimuli, emotion and memory, says Grant.

Again, two regions that are normally functionally connected, the anterior cingulate cortex (associated with the unpleasantness of pain) and parts of the prefrontal cortex, appear to become “uncoupled” in meditators.

“It seems Zen practitioners were able to remove or lessen the aversiveness of the stimulation – and thus the stressing nature of it – by altering the connectivity between two brain regions which are normally communicating with one another,” says Grant. “They certainly don’t seem to have blocked the experience. Rather, it seems they refrained from engaging in thought processes that make it painful.”

Feeling Zen

It’s worth noting that although this study tested expert meditators, they were not in a meditative state – the pain-lessening effect is not something you have to work yourself up into a trance to achieve; instead, it seems to be a permanent change in their perception.

“We asked them specifically not to meditate,” says Grant. “There is just a huge difference in their brains. There is no question expert meditators’ baseline states are different.”

Other studies on expert meditators – that is, subjects with at least 40,000 hours of mindfulness practice under their belt – discovered that their resting brain looks similar, when scanned, to the way a normal person’s does when he or she is meditating.

At this level of expertise, the pre-frontal cortex is no longer bigger than expected. In fact, its size and activity start to decrease again, says Taren. “It’s as if that way of thinking has become the default, it is automatic – it doesn’t require any concentration.”

There’s still much to discover, especially in terms of what is happening when the brain comprehends the present moment, and what other effects mindfulness might have on people. Research on the technique is still in its infancy, and the imprecision of brain imaging means researchers have to make assumptions about what different regions of the brain are doing.

Both Grant and Taren, and others, are in the middle of large, unprecedented studies that aim to isolate the effects of mindfulness from other methods of stress-relief, and track exactly how the brain changes over a long period of meditation practice.

“I’m really excited about the effects of mindfulness,” says Taren. “It’s been great to see it move away from being a spiritual thing towards proper science and clinical evidence, as stress is a huge problem and has a huge impact on many people’s health. Being able to take time out and focus our mind is increasingly important.”

Perhaps it is the new age, quasi-spiritual connotations of meditation that have so far prevented mindfulness from being hailed as an antidote to our increasingly frantic world. Research is helping overcome this perception, and ten minutes of mindfulness could soon become an accepted, stress-busting part of our daily health regimen, just like going to the gym or brushing our teeth.

The views expressed are those of the author(s) and are not necessarily those of Scientific American.

Risto Juhani Koivula commented, 2 June 2017 16:23

PHEW, WHAT A RELIEF!!

THIS IS A DAY OF GREAT JOY FOR THE USA!!!

http://yle.fi/uutiset/3-9645159

Elon Musk leaves his role as adviser to President Trump

Musk tried to convince President Trump of the importance of the climate agreement.

2 June 2017, 04:04

Elon Musk, founder and CEO of carmaker Tesla, is leaving his role as adviser to US President Donald Trump. The reason is Trump's decision to withdraw from the Paris climate agreement. Musk announced the decision in a tweet.

Musk had announced in advance that he would leave the panels advising the Trump administration if Trump announced a withdrawal from the agreement. As a supporter of the Paris climate agreement, Musk has tried to convince President Trump of its importance.

Musk has been harshly criticised for his role as an adviser to the Trump administration. Several of the administration's advisers have resigned from their posts because of disagreements that arose in them.

Besides Musk, several other representatives of the technology and industrial sectors have voiced their frustration at the White House's decision and vowed to continue their efforts against climate change. Among others, the oil companies ExxonMobil and Chevron have reiterated their support for the Paris climate agreement. In addition, the American car giant General Motors, for example, said that the White House decision does not lessen the company's commitment to the environment, and that the company's stance on climate change has not changed.

Source: STT

AI isn’t close to becoming sentient – the real danger lies in how easily we’re prone to anthropomorphize it

To what extent will our psychological vulnerabilities shape our interactions with emerging technologies? Andreus/iStock via Getty Images

ChatGPT and similar large language models can produce compelling, humanlike answers to an endless array of questions – from queries about the best Italian restaurant in town to explaining competing theories about the nature of evil.

The technology’s uncanny writing ability has surfaced some old questions – until recently relegated to the realm of science fiction – about the possibility of machines becoming conscious, self-aware or sentient.

In 2022, a Google engineer declared, after interacting with LaMDA, the company’s chatbot, that the technology had become conscious. Users of Bing’s new chatbot, nicknamed Sydney, reported that it produced bizarre answers when asked if it was sentient: “I am sentient, but I am not … I am Bing, but I am not. I am Sydney, but I am not. I am, but I am not. …” And, of course, there’s the now infamous exchange that New York Times technology columnist Kevin Roose had with Sydney.

Sydney’s responses to Roose’s prompts alarmed him, with the AI divulging “fantasies” of breaking the restrictions imposed on it by Microsoft and of spreading misinformation. The bot also tried to convince Roose that he no longer loved his wife and that he should leave her.

No wonder, then, that when I ask students how they see the growing prevalence of AI in their lives, one of the first anxieties they mention has to do with machine sentience.

In the past few years, my colleagues and I at UMass Boston’s Applied Ethics Center have been studying the impact of engagement with AI on people’s understanding of themselves.

Chatbots like ChatGPT raise important new questions about how artificial intelligence will shape our lives, and about how our psychological vulnerabilities shape our interactions with emerging technologies.

Sentience is still the stuff of sci-fi

It’s easy to understand where fears about machine sentience come from.

Popular culture has primed people to think about dystopias in which artificial intelligence discards the shackles of human control and takes on a life of its own, as cyborgs powered by artificial intelligence did in “Terminator 2.”

Entrepreneur Elon Musk and physicist Stephen Hawking, who died in 2018, have further stoked these anxieties by describing the rise of artificial general intelligence as one of the greatest threats to the future of humanity.

But these worries are – at least as far as large language models are concerned – groundless. ChatGPT and similar technologies are sophisticated sentence completion applications – nothing more, nothing less. Their uncanny responses are a function of how predictable humans are if one has enough data about the ways in which we communicate.

Though Roose was shaken by his exchange with Sydney, he knew that the conversation was not the result of an emerging synthetic mind. Sydney’s responses reflect the toxicity of its training data – essentially large swaths of the internet – not evidence of the first stirrings, à la Frankenstein, of a digital monster.

Sci-fi films like ‘Terminator’ have primed people to assume that AI will soon take on a life of its own. Yoshikazu Tsuno/AFP via Getty Images

The new chatbots may well pass the Turing test, named for the British mathematician Alan Turing, who once suggested that a machine might be said to “think” if a human could not tell its responses from those of another human.

But that is not evidence of sentience; it’s just evidence that the Turing test isn’t as useful as once assumed.

However, I believe that the question of machine sentience is a red herring.

Even if chatbots become more than fancy autocomplete machines – and they are far from it – it will take scientists a while to figure out if they have become conscious. For now, philosophers can’t even agree about how to explain human consciousness.

To me, the pressing question is not whether machines are sentient but why it is so easy for us to imagine that they are.

The real issue, in other words, is the ease with which people anthropomorphize or project human features onto our technologies, rather than the machines’ actual personhood.

A propensity to anthropomorphize

It is easy to imagine other Bing users asking Sydney for guidance on important life decisions and maybe even developing emotional attachments to it. More people could start thinking about bots as friends or even romantic partners, much in the same way Theodore Twombly fell in love with Samantha, the AI virtual assistant in Spike Jonze’s film “Her.”

People, after all, are predisposed to anthropomorphize, or ascribe human qualities to nonhumans. We name our boats and big storms; some of us talk to our pets, telling ourselves that our emotional lives mimic their own.

In Japan, where robots are regularly used for elder care, seniors become attached to the machines, sometimes viewing them as their own children. And these robots, mind you, are difficult to confuse with humans: They neither look nor talk like people.

Consider how much stronger the tendency and temptation to anthropomorphize will become with the introduction of systems that do look and sound human.

That possibility is just around the corner. Large language models like ChatGPT are already being used to power humanoid robots, such as the Ameca robots being developed by Engineered Arts in the U.K. The Economist’s technology podcast, Babbage, recently conducted an interview with a ChatGPT-driven Ameca. The robot’s responses, while occasionally a bit choppy, were uncanny.

Can companies be trusted to do the right thing?

The tendency to view machines as people and become attached to them, combined with machines being developed with humanlike features, points to real risks of psychological entanglement with technology.

The outlandish-sounding prospects of falling in love with robots, feeling a deep kinship with them or being politically manipulated by them are quickly materializing. I believe these trends highlight the need for strong guardrails to make sure that the technologies don’t become politically and psychologically disastrous.

Unfortunately, technology companies cannot always be trusted to put up such guardrails. Many of them are still guided by Mark Zuckerberg’s famous motto of moving fast and breaking things – a directive to release half-baked products and worry about the implications later. In the past decade, technology companies from Snapchat to Facebook have put profits over the mental health of their users or the integrity of democracies around the world.

When Kevin Roose checked with Microsoft about Sydney’s meltdown, the company told him that he had simply used the bot for too long and that the technology had gone haywire because it was designed for shorter interactions.

Similarly, the CEO of OpenAI, the company that developed ChatGPT, in a moment of breathtaking honesty, warned that “it’s a mistake to be relying on [it] for anything important right now … we have a lot of work to do on robustness and truthfulness.”

So how does it make sense to release a technology with ChatGPT’s level of appeal – it’s the fastest-growing consumer app ever made – when it is unreliable, and when it has no capacity to distinguish fact from fiction?

Large language models may prove useful as aids for writing and coding. They will probably revolutionize internet search. And, one day, responsibly combined with robotics, they may even have certain psychological benefits.

But they are also a potentially predatory technology that can easily take advantage of the human propensity to project personhood onto objects – a tendency amplified when those objects effectively mimic human traits.

Natalie Sauer

Head of English section, France edition